For this year's Darwin Day post, I will be reviewing the evolutionary origins and developmental emergence of gyrification in the Mammalian brain. Gyrification occurs when the neocortex (the six-layered cortex on the dorsal surface of Mammalian brains) develops wrinkles and folds rather than a smooth surface (lissencephaly). Gyrification is measured using the gyrification index (GI), the ratio of the total cortical surface, including the surface buried in folds, to the exposed outer surface; a perfectly smooth brain has a GI of 1. Reported GI values include 5.6 in pilot whales (Globicephala), 3.8 in elephants (Loxodonta), and 2.6 in humans (Homo) [1]. A more extensive phylogenetic analysis (Figure 1) shows the evolutionary trajectory of this trait in Hominids, and shows that a highly gyrified brain is associated with other traits that emerge as early as the divergence of Primates.

Figure 1. A phylogeny of primate brain evolution (with Mammalian outgroups), with a focus on the origin of traits found in the human brain. COURTESY [2].

The evolutionary origins of gyrification may be either monophyletic or polyphyletic, as several different genes have been identified as potential contributing factors. Gyrification might also be a product of convergent evolution, since the trait may simply be a by-product of larger neocortical sheets. Striedter [3] points out that gyrification may simply result from the physical constraint of fitting a vastly enlarged cortical sheet into a skull scaled to the organism's body size.

Figure 2. Allometric scaling across select Mammalian brains, showing an increase in gyrification with brain size. COURTESY [4].

In Figure 2, we see that larger brains generally have larger GI values. The curvilinear relationship shown in the figure is an example of allometric scaling. Allometry [5] is a convenient way to quantitatively compare relative growth across species, and the resulting regression parameters can suggest underlying mechanisms that control and predict growth across evolution.

In this case, the allometric relationship is between brain size and tangential expansion. Tangential expansion is the expansion of gray matter relative to the constraints imposed by white matter, expressed as a gray-to-white matter proportion [4]. As the amount of gray matter increases, brain size also tends to increase, and so does GI. However, the proportion of gray to white matter eventually saturates, while brain size and GI continue to increase.
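Allometric relationships are conventionally written as a power law, y = a * x^b, which becomes a straight line, log y = log a + b * log x, on log-log axes; the slope b is the scaling exponent. A minimal sketch of how such parameters are estimated is shown below. It is a generic least-squares fit, not the analysis behind Figure 2 or [4], and the data are hypothetical values generated from an assumed power law (GI = 1.0 * volume^0.1) purely to show that the fit recovers the parameters.

```python
import math

def fit_allometry(x, y):
    """Fit y = a * x**b by least squares on log-transformed data.

    Returns (a, b): the allometric coefficient and the scaling exponent.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    lx = [math.log(v) for v in x]
    ly = [math.log(v) for v in y]
    mx = sum(lx) / len(lx)
    my = sum(ly) / len(ly)
    sxx = sum((u - mx) ** 2 for u in lx)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((u - mx) * (w - my) for u, w in zip(lx, ly))
    b = sxy / sxx              # slope on log-log axes = scaling exponent
    a = math.exp(my - b * mx)  # intercept, back-transformed to linear scale
    return a, b

# Hypothetical, noise-free data from GI = 1.0 * volume**0.1 (arbitrary units);
# real measurements would scatter around the fitted line.
volumes = [10.0, 100.0, 1000.0, 10000.0]
gi_values = [1.0 * v ** 0.1 for v in volumes]
a, b = fit_allometry(volumes, gi_values)
print(round(a, 3), round(b, 3))  # 1.0 0.1
```

Working on log-log axes is what turns a curvilinear relationship into a straight line, so that exponents can be compared directly across clades.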

Figure 3. Simulating gyrification as a by-product of physical processes. 3-D printed models based on MRI data for brains from different stages of development. COURTESY [6].

Genetic analyses implicate specific genes in controlling brain volume, which in turn sets the stage for gyrification [7]. Developmental mutations in the human genetic loci collectively known as MCPH1-18 [8] cause microcephaly, a condition in which the mature brain remains small and lacks normal gyrification. In a phylogenetic comparative study of 34 primate species [9], the largest share of the between-species variance in folding was explained by a random Brownian motion model of trait evolution. Furthermore, the data within the order Primates show that fold wavelength is stable (~12 mm) despite a 20-fold difference in brain volume [9].
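Under a Brownian motion model, a trait wanders by random, unbiased increments, so the expected variance of a lineage's trait grows in proportion to elapsed time, and two species are expected to covary in proportion to the time they spent as a single ancestral lineage. The sketch below simulates that generic model on a hypothetical two-species tree. It is not the method or data of [9]; the tree, the rate sigma, and the branch lengths are illustrative assumptions.

```python
import random

def simulate_bm(x0, sigma, duration, n_steps, rng):
    """Evolve one trait value under Brownian motion for `duration` time units.

    Each step adds an independent Gaussian increment with variance
    sigma**2 * dt, so the endpoint variance is sigma**2 * duration.
    """
    dt = duration / n_steps
    x = x0
    for _ in range(n_steps):
        x += rng.gauss(0.0, sigma * dt ** 0.5)
    return x

def simulate_sister_pair(x0, sigma, t_shared, t_split, n_steps, rng):
    """Hypothetical tree: a shared stem branch, then two independent tips."""
    ancestor = simulate_bm(x0, sigma, t_shared, n_steps, rng)
    tip_a = simulate_bm(ancestor, sigma, t_split, n_steps, rng)
    tip_b = simulate_bm(ancestor, sigma, t_split, n_steps, rng)
    return tip_a, tip_b

def mean(values):
    return sum(values) / len(values)

def covariance(xs, ys):
    """Sample covariance (n - 1 denominator)."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)

# Hypothetical parameters: sigma = 1, 2 time units shared, then 1 unit apart.
rng = random.Random(42)
pairs = [simulate_sister_pair(0.0, 1.0, 2.0, 1.0, 20, rng) for _ in range(4000)]
a_vals = [a for a, _ in pairs]
b_vals = [b for _, b in pairs]

# Theory: each tip's variance = sigma^2 * (2 + 1) = 3;
# covariance between the sisters = sigma^2 * shared time = 2.
print(round(covariance(a_vals, a_vals), 2), round(covariance(a_vals, b_vals), 2))
```

The covariance term is what makes Brownian motion a phylogenetic null model: closely related species are expected to resemble each other simply because they share more evolutionary history.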

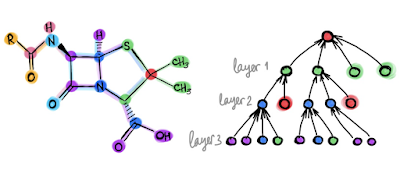

As an alternative to purely evolutionary explanations, gyrification can result from physical processes during developmental morphogenesis (Figure 3). Gyrification produces gyral (ridge-like) and sulcal (groove-like) convolutions. In the earliest stages of development, the brain shows no gyrification. However, as the neocortex grows faster than the rest of the brain, the resulting mechanical instability leads to buckling [6]. Buckling thus creates gyrification, although the consistent location and timing of folds during development suggest underlying cellular and molecular mechanisms. Demonstrating a biophysical mechanism does not, however, preclude a phylogenetic explanation. As we will see below, surface physics relies upon the presence of certain cell types and growth conditions.
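To give a feel for why buckling produces folds of a characteristic size, here is a sketch of the classical linear-elastic result for a thin, stiff layer bonded to a thick, softer substrate and put under compression: it wrinkles with wavelength lambda = 2 * pi * h * (mu_f / (3 * mu_s))^(1/3), where h is the layer thickness and mu_f, mu_s are the shear moduli of layer and substrate (both assumed incompressible). This textbook idealization is not the growth model of [6]; treating the cortex as the layer and white matter as the substrate, and the input numbers below, are illustrative assumptions.

```python
import math

def wrinkle_wavelength(thickness_mm, stiffness_ratio):
    """Wrinkling wavelength of a thin stiff layer on a thick soft substrate.

    Classical small-strain result: lambda = 2*pi*h*(mu_f / (3*mu_s))**(1/3),
    where stiffness_ratio = mu_f / mu_s (layer modulus over substrate modulus).
    """
    if thickness_mm <= 0 or stiffness_ratio <= 0:
        raise ValueError("thickness and stiffness ratio must be positive")
    return 2 * math.pi * thickness_mm * (stiffness_ratio / 3) ** (1 / 3)

# Hypothetical inputs: a 2.5 mm layer three times stiffer than its substrate.
print(round(wrinkle_wavelength(2.5, 3.0), 1))  # 15.7
```

Brain volume does not appear in this formula: in this idealized picture, the wavelength is set by the layer's thickness and only weakly (through a cube root) by the stiffness ratio.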

Figure 4. An overview of the evolution of development (Evo-Devo) of gyrification. Gyrification and lissencephaly occur through mechanisms that affect changes in brain size and GI relative to the last common ancestor (in this figure, transitional form). COURTESY [10].

Several cellular and molecular factors also contribute to neocortical growth, and thus to gyrification. In Figure 4, we see four archetypes that result from increases and decreases in brain size combined with increases and decreases in GI. For example, increases in basal radial glia (bRG) progenitors and transit-amplifying progenitor cells (TAPs) contribute to increases in both brain size and GI [10]. Decreases in brain size and GI are controlled by changes in cell cycle timing and associated heterochronic changes. Heterochrony refers to changes in the timing and rate of development, including when growth terminates, and it is one of several factors suggesting that lissencephaly is actually the derived condition. If so, smooth brains would represent evolutionary reversals from an ancestral gyrified state, occurring multiple times across the Mammalian tree.

Once again, an evolutionary conundrum. Happy evolutioning!

NOTES:

[1] Johnson, S. Number and Complexity of Cortical Gyri. Center for Academic Research and Training in Anthropogeny. La Jolla, CA. Accessed: February 13, 2022.

[2] Franchini, L.F. (2021). Genetic Mechanisms Underlying Cortical Evolution in Mammals. Frontiers in Cell and Developmental Biology, 9, 591017.

[3] Striedter, G. (2005). Principles of Brain Evolution. Sinauer, Sunderland, MA.

[4] Tallinen, T., Chung, J.Y., Biggins, J.S., and Mahadevan, L. (2014). Gyrification from constrained cortical expansion. PNAS, 111(35), 12667-12672.

[5] Shingleton, A. (2010). Allometry: The Study of Biological Scaling. Nature Education Knowledge, 3(10), 2.

[6] Tallinen, T., Chung, J.Y., Rousseau, F., Girard, N., Lefevre, J., and Mahadevan, L. (2016). On the growth and form of cortical convolutions. Nature Physics, 12, 588–593.

[9] Heuer, K., Gulban, O.F., Bazin, P-L., Osoianu, A., Valabregue, R., Santin, M., Herbin, M., and Toro, R. (2019). Evolution of neocortical folding: A phylogenetic comparative analysis of MRI from 34 primate species. Cortex, 118, 275-291.
