It's that time again -- time for the OpenWorm Annual meeting. Below are the slides I presented on progress and the latest activities in the DevoWorm group. If anything looks interesting to you, and you would like to contribute, please let me know. Click on any slide to enlarge.

November 30, 2023

August 24, 2023

Saturday Morning NeuroSim Discussion Thread: Physical Computing

Over the past three years, the Saturday Morning NeuroSim group has met weekly on Saturdays (mornings in North America). The Saturday Morning format continues in the tradition of Saturday Morning Physics and covers a wide variety of topics.

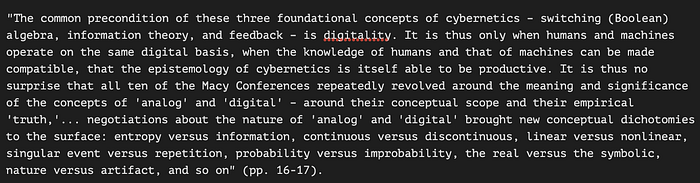

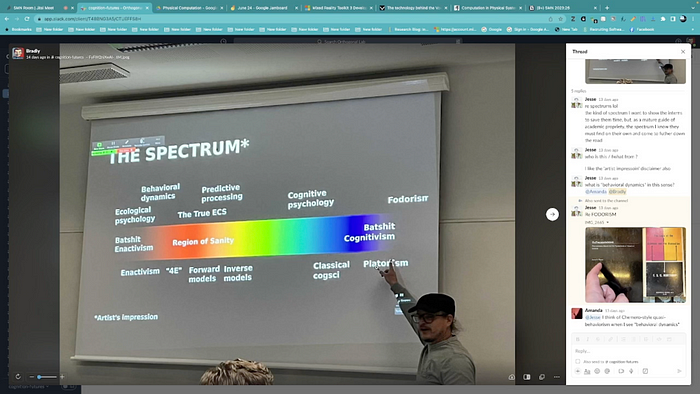

One recent lecture/discussion thread is on Physical Computation. Our approach to the topic begins with the debate around the role of computation in Cognitive Science and the Neurosciences. And so we begin in Week 1 with a discussion of the connections between computation, information processing, and the brain, largely focusing on the work of Gualtiero Piccinini and Corey Maley. A starting point for this session is their Stanford Encyclopedia of Philosophy article on “Computation in Physical Systems”. Many current assumptions about computation in the brain stem from the Church-Turing thesis, which often leads to a poor fit between model and experiment. Piccinini and Maley propose that the Church-Turing-Deutsch thesis is preferable when talking about systems that perform non-digital computations. Amanda Nelson pointed out that it makes sense to think of evolved biological systems (brains) as instances of analogue computers. Another interesting point from the session is the distinction between digital (Von Neumann) computers and alternatives such as “physical” or “analog” computation, which would be picked up on in the next session.

Physical Computation Session I from June 24 (roughly one hour in length).

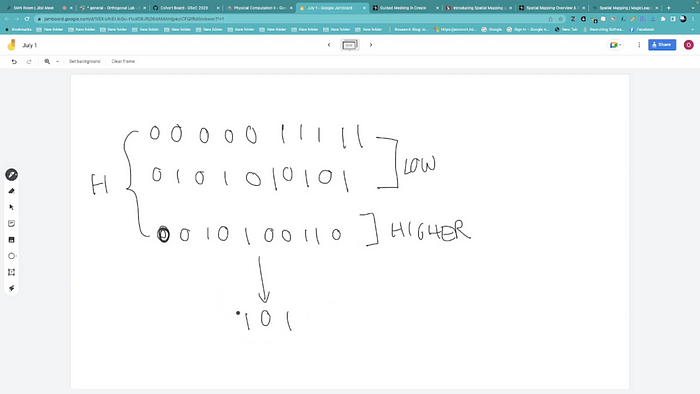

The second session focused on physical computation, and led us to discuss the idea of pancomputationalism. While pancomputationalism is the fundamental assumption behind the phrase “the brain is a computer” [1], we were also introduced to pancomputationalism in ferrofluidic systems and mycelial networks. We discussed the works of Richard Feynman (Feynman Lectures on Computation) and Edward Fredkin (Digital Physics), which helped us form an epistemic framework for computation in nature [2]. We also discussed Andy Adamatzky’s work on unconventional computation, particularly his work on Reaction-Diffusion (R-D) Automata, which, while discrete in nature, have connections to excitable (e.g., neural) systems via the FitzHugh-Nagumo model.
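For readers who have not met the FitzHugh-Nagumo model, the sketch below integrates its two equations with a simple forward-Euler scheme. It is only an illustration of excitable dynamics, not a reproduction of the Reaction-Diffusion automata discussed in the session. The function name `simulate_fhn`, the parameter values (a = 0.7, b = 0.8, eps = 0.08), the step size, and the initial conditions are conventional textbook-style choices assumed here, not values taken from the discussion.

```python
def simulate_fhn(current, a=0.7, b=0.8, eps=0.08, dt=0.01, t_end=200.0,
                 v0=-1.0, w0=-0.5):
    """Forward-Euler integration of the FitzHugh-Nagumo equations.

    dv/dt = v - v^3/3 - w + current   (fast, voltage-like variable)
    dw/dt = eps * (v + a - b * w)     (slow recovery variable)

    All parameter defaults are illustrative assumptions.
    Returns two lists with the trajectories of v and w.
    """
    steps = round(t_end / dt)
    v, w = v0, w0
    vs, ws = [v], [w]
    for _ in range(steps):
        dv = v - v ** 3 / 3.0 - w + current
        dw = eps * (v + a - b * w)
        # Update both variables from the same (old) state.
        v, w = v + dt * dv, w + dt * dw
        vs.append(v)
        ws.append(w)
    return vs, ws


if __name__ == "__main__":
    for current in (0.0, 0.5):
        vs, _ = simulate_fhn(current)
        tail = vs[len(vs) // 2:]
        print(f"input={current}: v ranges over [{min(tail):.2f}, {max(tail):.2f}] "
              "in the second half of the run")
```

With no input current the system relaxes to a resting state, while a constant input of 0.5 (again, an assumed value) pushes it into sustained spiking-like oscillations, which is the qualitative behavior that makes the model a common stand-in for excitable media.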

Physical Computation Session II from July 1 (roughly one hour in length)

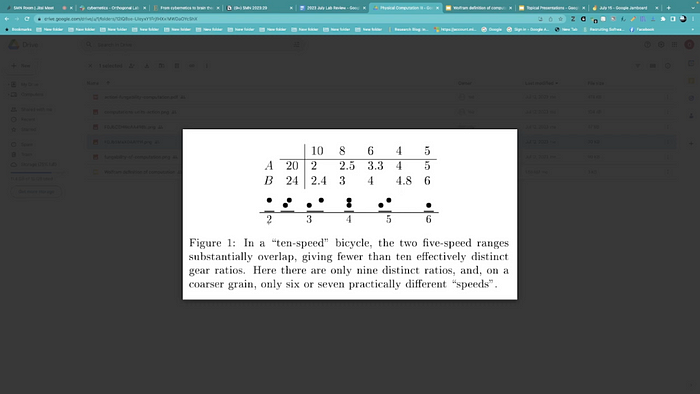

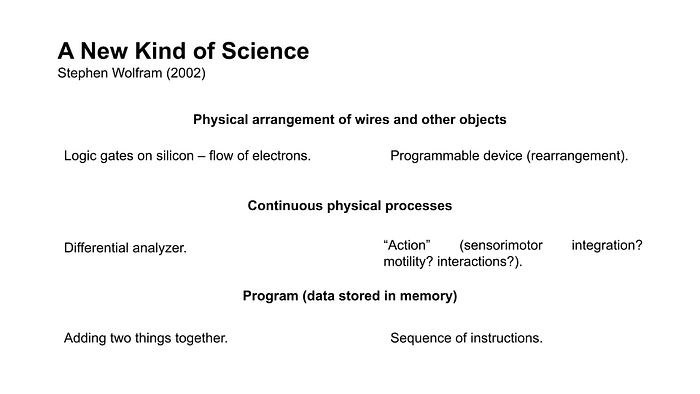

After taking a break from the topic, our July 15 meeting featured an alternative viewpoint on pancomputationalism. This was made manifest in a shorter discussion on physical computation, with views from Tommaso Toffoli and Stephen Wolfram. We covered Toffoli’s paper “Action, or the fungibility of computation”, which connects physical entropy, information, action, and the amount of computation performed by a system. This paper is of great interest to the group in light of our work and discussions on 4E (embodied, embedded, enactive, and extended) cognition [3]. Toffoli makes some provocative arguments herein, including the notion of computation as “units of action”. A concrete example of this is a 10-speed bicycle, which is not only not a conventional computer, but also has linkages to perception and action. Amanda Nelson found the notion of transformation from one unit into another particularly salient to the distinction between analogue and digital computation. The physical basis of all forms of computation can also be better defined by revisiting “A New Kind of Science” [4], in which Wolfram sketches out the essential components and analogies of a computational system with a physical substrate. We can then compare some of the more abstract aspects of a physical computer with neural systems. This is particularly relevant to engineered systems that include select components of biological networks.

Physical Computation Session III from July 15 (about 15 minutes in length)

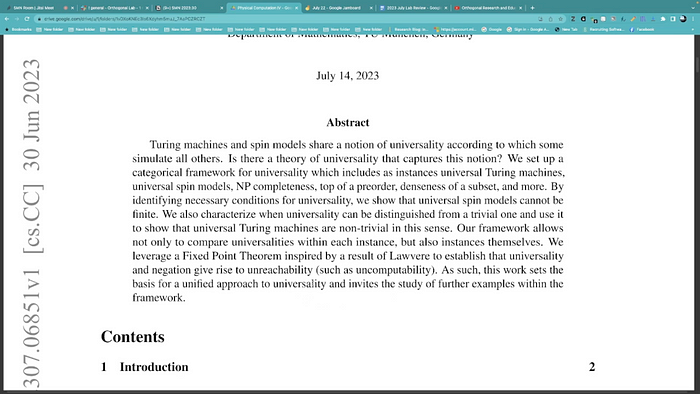

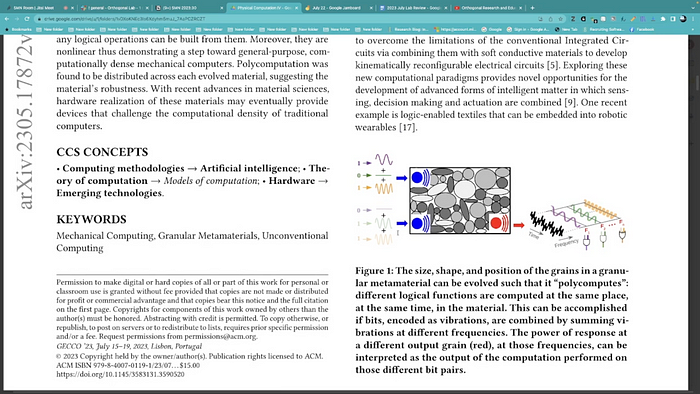

The next session followed up on computation in natural systems as well as Wolfram’s notion of universality, particularly in terms of computational models. In particular, Wolfram argues that cellular automata models can characterize universality, which is related to pancomputationalism. Universality suggests that any one computational model can capture system behavior that can be applied across a wide variety of domains. In this sense, context is not important. Rule 30 produces an output that resembles pattern formation in biological phenotypes (the shell of snail species Conus textile), but can also be used as a pseudo-random number generator [5]. In “A Framework for Universality in Physics, Computer Science, and Beyond”, this perspective is extended to understand the connections between computation defined by the Turing machine and a class of models called Spin Models. This provides a framework for universality that is useful for defining computation across the various levels of neural systems, but also gives rise to understanding what is uncomputable. This session’s natural system examples featured computation among bacterial colonies embedded in a colloidal substrate along with computation in granular matter itself. The latter is an example of non-silicon based polycomputation [6].
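The two uses of Rule 30 mentioned above can be seen in a few lines of code. The sketch below is a minimal illustration, assuming a finite row with wrap-around boundaries and a single live cell as the starting condition; the function names are hypothetical. Printing the rows gives the triangular, shell-like pattern, and reading off the center column gives the bit stream commonly used as a pseudo-random source.

```python
def rule30_step(cells):
    """Apply one synchronous Rule 30 update with wrap-around boundaries.

    Rule 30 can be written as: new cell = left XOR (center OR right).
    """
    n = len(cells)
    return [cells[(i - 1) % n] ^ (cells[i] | cells[(i + 1) % n])
            for i in range(n)]


def rule30_rows(width, steps):
    """Return the space-time diagram as a list of rows.

    The first row has a single live cell in the middle (an assumed,
    but conventional, initial condition).
    """
    row = [0] * width
    row[width // 2] = 1
    rows = [row]
    for _ in range(steps):
        row = rule30_step(row)
        rows.append(row)
    return rows


def center_column_bits(width, steps):
    """Read the center cell of every row as a stream of bits."""
    return [row[width // 2] for row in rule30_rows(width, steps)]


if __name__ == "__main__":
    # Pattern view: the irregular triangles resemble shell pigmentation.
    for row in rule30_rows(width=31, steps=15):
        print("".join("#" if cell else "." for cell in row))
    # Bit-stream view: the center column read top to bottom.
    print(center_column_bits(width=101, steps=16))
```

The width should be kept larger than twice the number of steps so that the wrap-around boundary does not feed back into the center column.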

Physical Computation Session IV from July 22 (about 12 minutes in length).

After taking a more extended break from the topic, we returned to this discussion four weeks later. Our sixth (VI) session occurred in our August 19 meeting, and covered three topics: physical computation and topology, morphological computation, and RNA computing/Molecular Biology as universal computer.

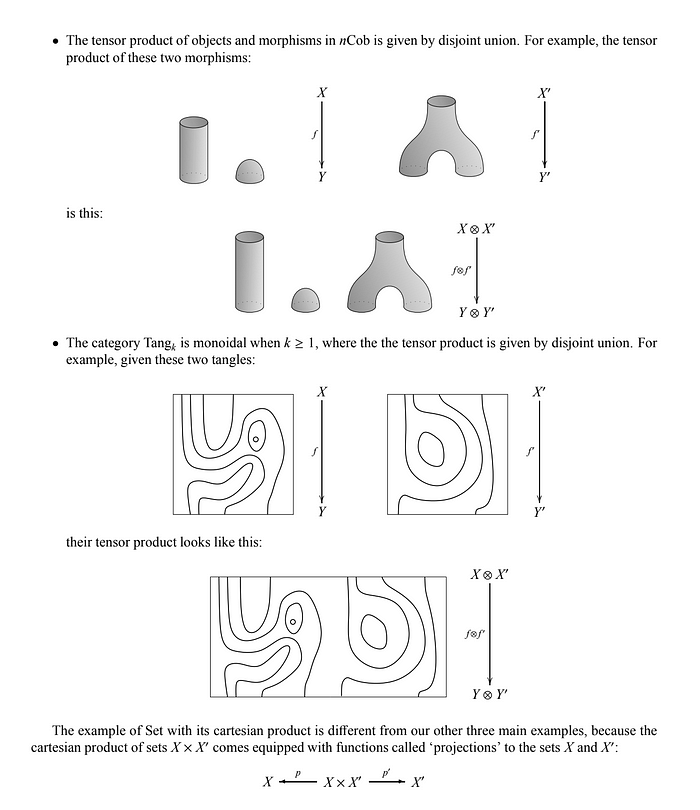

We have discussed category theory before in our discussions on Symbolic Systems and Causality. In this section, we revisited the role of category theory, but this time with reference to Physical Computation. John Carlos Baez and Mike Stay give a tour of category theory’s role in computation via topology. The idea is that category theory forms analogies with computation, which can be expressed on a topological surface/space.

Computable Topology, Wikipedia.

Baez, J. and Stay, M. (2009). Physics, Topology, Logic and Computation: A Rosetta Stone. arXiv, 0903.0340.

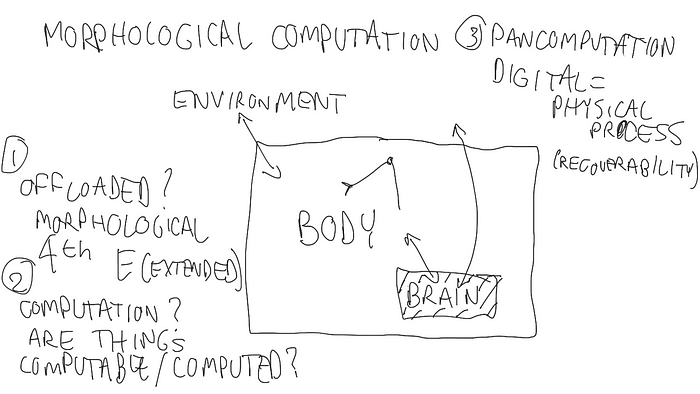

We also covered the role of Morphological Computation by reviewing three papers on this form of physical computation that intersects with digital computational representations. Morphological Computation is the role of the body in the notion of “cognition is computation”. One idea that is critiqued within these papers is offloading from the brain to the body. Offloading is moving computational capacity from the central nervous system to the periphery. If you grab a ball with your hand, you recognize and send commands to grasp the ball, but you must grasp and otherwise manipulate the object to fully compute the object. Thus, this capacity is said to be offloaded to the hand or peripheral nervous system.

Interestingly, offloading and embodiment are integral parts of 4E (Embodied, Embedded, Enactive, and Extended) Cognition, which itself critiques the brain-as-computation idea. But as an analytical tool, morphological computation is much more utilitarian than Cognitive Science theory, and is concerned with how robotic bodies and other mechanical systems interact with an intelligent controller. In non-embodied robotics, body dynamics are treated as noise. But in morphological computation, body dynamics play an integral role in the intelligent system and contribute to a dynamical system.

Muller, V.C. and Hoffmann, M. (2017). What Is Morphological Computation? On How the Body Contributes to Cognition and Control. Artificial Life, 23, 1–24.

Fuchslin, R.M., Dzyakanchuk, A., Flumini, D., Hauser, H., Hunt, K.J., Luchsinger, R.H., Reller, B., Scheidegger, S., and Walker, R. (2013). Morphological Computation and Morphological Control: Steps Toward a Formal Theory and Applications. Artificial Life, 19, 9–34.

Milkowski, M. (2018). Morphological Computation: Nothing but Physical Computation. Entropy, 20, 942.

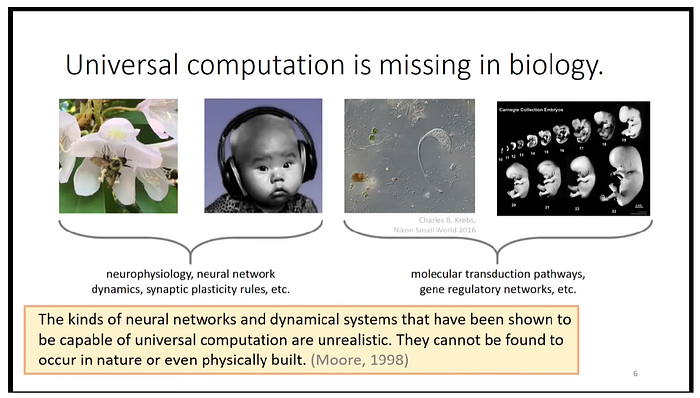

While these papers do not get too deeply into the role of pancomputationalism in Morphological Computation, it is implicit in them and plays a central role in our last topic: RNA computing and Molecular Biology. For more information, see this talk on YouTube and the paper below. Basically, while the pancomputationalism perspective is missing from biology, the structure and potential function of DNA and RNA provide a route to physical computation.

Akhlaghpour, H. (2022). An RNA-based theory of natural universal computation. Journal of Theoretical Biology, 537, 110984.

Thanks to Morgan Hough for joining us from Hawaii (4:00 am!) on August 19.

References

[1] Richards, B.A. and Lillicrap, T.P. (2022). The Brain-Computer Metaphor Debate Is Useless: A Matter of Semantics. Frontiers in Computer Science, 4, 810358.

Should we just simply “shut up and calculate”, or debate some more?

[2] Fredkin, E. (2003). An Introduction to Digital Philosophy. International Journal of Theoretical Physics, 42(2), 189.

This work is the Rosetta Stone for many comparisons between modern AI systems and human-like intelligence, at least in terms of computation.

[3] Newen, A., De Bruin, L., and Gallagher, S. (2018). The Oxford Handbook of 4E Cognition. Oxford University Press.

[4] Wolfram, S. (2002). A New Kind of Science. Wolfram Media.

This is a link to the 20th Anniversary edition, with a full set of Cellular Automata rules, defined by number.

[5] Zenil, H. (2016). How can I generate random numbers using the Rule 30 Cellular Automaton? Quora post.

[6] Bongard, J. and Levin, M. (2023). There’s Plenty of Room Right Here: Biological Systems as Evolved, Overloaded, Multi-Scale Machines. Biomimetics, 8(1), 110.

Saturday Morning NeuroSim Discussion Thread: Causality

Over the past three years, the Saturday Morning NeuroSim group has met weekly on Saturdays (mornings in North America). The Saturday Morning format continues in the tradition of Saturday Morning Physics and covers a wide variety of topics.

Our discussion thread on causality begins with Causality and Circles on May 13. From a Mastodon post by Yohan John, we considered how spatialized diagrams are confused with temporal sequences in a feedback loop. We also covered three papers in this session.

Vernon, D., Lowe, R., Thill, S., and Ziemke, T. (2015). Embodied cognition and circular causality: on the role of constitutive autonomy in the reciprocal coupling of perception and action. Frontiers in Psychology, 6, 1660.

Raginsky, M. (2011). Directed Information and Pearl’s Causal Calculus. arXiv, 1110.0718.

Laland, K.N., Odling-Smee, J., Hoppitt, W., and Uller, T. (2013). More on how and why: cause and effect in biology revisited. Biology & Philosophy, 28, 719–745.

Our conversation continued after the last week of Neuromatch Academy, when the NMA curriculum featured causal networks. Our July 29 meeting featured a collection of references on Bayesianism, Probabilistic Graphical Models, methods of interaction, time-series applications, and more. Some core readings are given below.

Stanford Encyclopedia of Philosophy: causal models. This article takes an epistemological approach and provides us with a baseline for structural equation models, probabilistic graphical models, and other statistical formulations of causal relationships.

Daphne Koller’s Probabilistic Graphical Models course. Hosted on Stanford University’s Open Classroom platform, this course includes units on representation, inference, learning, and causation. The causation unit covers decision theory, utility functions, influence diagrams, and the notion of perfect information.

Pearl, J. (2000). Causality. Cambridge University Press, Cambridge, UK. This classic book by Judea Pearl builds from a theory of inferred causation, starting at causal diagrams, and continuing through direct effects, indirect effects, confounds, counterfactuals, bounding effects, and probabilities. The book also covers structural models, decision analysis, and Simpson’s Paradox as the basis for methods for detecting causal relationships.

Scholkopf, B. (2019). Causality for Machine Learning. arXiv, 1911.10500.

Heckman, J.J. (2005). The Scientific Model of Causality. Sociological Methodology, 35, 1–97. Causality from an econometrics point-of-view. Counterfactuals are a set of possible outcomes generated by determinants. A causal effect is defined as the change in outcome produced by manipulating one factor among a set of factors while all of the others are held constant.

Taskesen, E. (2021). A step-by-step guide in detecting causal relationships using Bayesian structure learning in Python. Towards Data Science, September 7.

Which variables have a direct causal effect on a target variable? Hint: association and correlation are not equivalent to causation.

Bayesian Models:

Neuberg, L.G. (2003). Causality: models, reasoning, and inference. Econometric Theory, 19, 675–685.

Pearl, J. (2001). Bayesianism and causality, or, why I am only a half-Bayesian. In “Foundations of Bayesianism”, pgs. 19–36. Kluwer Press.

Methods of Interaction: networks and non-directional graphs, as opposed to directed acyclic graphs (DAGs), require a different set of considerations. The methods below cover highly interacting systems such as networks, and how change over time in these systems can be properly interpreted as causal.

Leng, S., Ma, H., Kurths, J., Lai, Y-C., Lin, W., Aihara, K., and Chen, L. (2020). Partial cross mapping eliminates indirect causal influences. Nature Communications, 11, 2632.

Park, S.H., Ha, S., and Kim, J.K. (2023). A general model-based causal inference method overcomes the curse of synchrony and indirect effects. Nature Communications, 14, 4287.

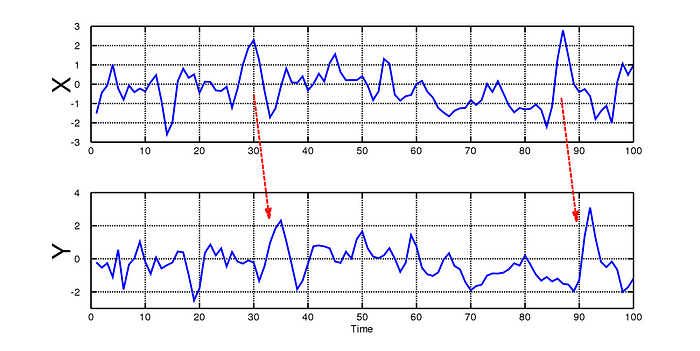

Time-series using Granger Causality: the first two references apply Granger Causality to time-series datasets. In such cases, the datapoints are dependent with respect to time. Given two time-series x and y, x is said to Granger-cause y if past (lagged) values of x improve the prediction of the current value of y beyond what prior values of y alone provide. The comparison case, predicting the current value of y from previous values of y only, serves as the counterfactual.

The final paper in the group (Stokes and Purdon) critiques Granger Causality from a Neuroscience perspective.

Carlos‐Sandberg, L. and Clack, C.D. (2021). Incorporation of causality structures to complex network analysis of time‐varying behaviour of multivariate time series. Scientific Reports, 11, 18880.

Runge, J., Nowack, P., Kretschmer, M., Flaxman, S., Sejdinovic, D. (2019). Detecting and quantifying causal associations in large nonlinear time series datasets. Science Advances, 5(11), aau4996.

Stokes, P.A. and Purdon, P.L. (2017). A study of problems encountered in Granger causality analysis from a neuroscience perspective. PNAS, 114(34), E7063-E7072.
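The basic Granger comparison described above, predicting y from its own past versus predicting y from its own past plus the past of x, can be sketched with two nested least-squares fits. This is a minimal illustration under stated assumptions: a linear autoregressive form, a fixed lag order of 2, and synthetic data in which x drives y by construction. The function names are hypothetical, and this is not the method used in any of the papers listed here.

```python
import numpy as np


def lagged_matrix(series, lags):
    """Columns are series[t-1], ..., series[t-lags] for t = lags..len-1."""
    n = len(series)
    return np.column_stack([series[lags - k: n - k] for k in range(1, lags + 1)])


def granger_f_stat(x, y, lags=2):
    """F statistic for the hypothesis that x Granger-causes y.

    Compares a restricted model (y on its own lags) with a full model
    (y on its own lags plus lags of x). Larger values mean that the
    past of x adds more predictive power.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    target = y[lags:]
    intercept = np.ones((len(target), 1))
    restricted = np.hstack([intercept, lagged_matrix(y, lags)])
    full = np.hstack([restricted, lagged_matrix(x, lags)])

    def residual_sum_of_squares(design):
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        return float(resid @ resid)

    rss_restricted = residual_sum_of_squares(restricted)
    rss_full = residual_sum_of_squares(full)
    dof = len(target) - full.shape[1]
    return ((rss_restricted - rss_full) / lags) / (rss_full / dof)


def simulate_pair(n=500, seed=0):
    """Synthetic data (an assumption for illustration): x drives y with lag 1."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=n)
    y = np.zeros(n)
    for t in range(1, n):
        y[t] = 0.5 * y[t - 1] + 0.8 * x[t - 1] + 0.1 * rng.normal()
    return x, y


if __name__ == "__main__":
    x, y = simulate_pair()
    print("F (x -> y):", granger_f_stat(x, y))
    print("F (y -> x):", granger_f_stat(y, x))
```

On this synthetic pair the statistic is large in the x to y direction and small in the reverse direction. As the Stokes and Purdon critique suggests, real neural data rarely satisfy the assumptions behind such a test, so a result like this should be read as evidence of predictive improvement rather than of mechanism.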

The third session (August 5) focused on causality specifically as it is treated in Neuroscience. This session followed up on a Twitter debate by Kording Lab and Earl Miller about the role of causality in neuroscience. The consensus answer to the question “Why is Neuroscience so into causality?” was that it provides a means to identify mechanisms for function. Causality in neuroscience differs from philosophical discussions about causality in that Neuroscience must infer causality from data, while philosophers (and statisticians) do the work of proving causality.

One interesting point from Kording Lab is that there is a difference between proximate causes and ultimate causes. In some fields, causality is obvious and so causal methods are not always necessary. But Neuroscience is partially about the behavioral substrate, and so we can turn to Niko Tinbergen’s four questions. The four questions concern 1) how a trait arose in development (proximate, dynamic), 2) how a trait arose in evolution (ultimate, dynamic), 3) what the mechanism or structure of a trait is (proximate, static), and 4) what the adaptive value or function of a trait is (ultimate, static).

You can read more about Tinbergen’s four questions and their causal implications in the following papers.

Beer, C. (2020). Niko Tinbergen and questions of instinct. Animal Behaviour, 164, 261–265.

Nesse, R.M. (2019). Tinbergen’s four questions: two proximate, two evolutionary. Evolution, Medicine, and Public Health, 2, doi:10.1093/emph/eoy035.

Mayr, E. (1961). Cause and effect in biology. Science, 134, 1501–1506.

The other papers from this session focused on mental representations and causal functional connectivity in the brain, respectively.

Sloman, S.A. and Lagnado, D. (2015). Causality in Thought. Annual Review of Psychology, 66, 223–247.

While Bayesian approaches are good for theory-building, they are an incomplete account of what goes on in the cognitive world.

Biswas, R. and Shlizerman, E. (2022). Statistical perspective on functional and causal neural connectomics: The Time-Aware PC algorithm. PLoS Computational Biology, 18(11), e1010653.

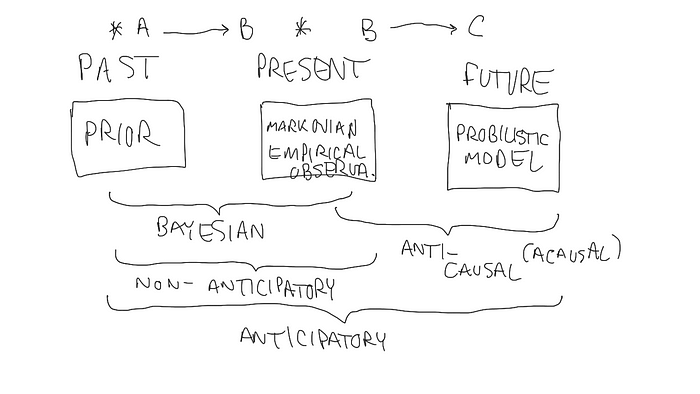

The fourth session (August 19) picks up on a point covered in the second session, namely how causality can be inferred from network data. This covers related ideas of transitivity, weak interactions, and anti-causal models. Papers for this session include networks in ecology, anticipative and non-anticipative control theory, and anti-causal systems.

Naghshtabrizi, P. and Hespanha, J.P. (2006). Anticipative and non-anticipative controller design for network control systems. Lecture Notes in Control and Information Sciences, 331.

Sugihara, G., May, R., Ye, H., Hsieh, C-H., Deyle, E., Fogarty, M., Munch, S. (2012). Detecting Causality in Complex Ecosystems. Science, 338, 496–500.

Chattopadhyay, I. (2014). Causality Networks. arXiv, 1406.6651.

Anticausal System. Wikipedia.

McCurdy, T. (2007). Causal Systems: understanding the basics. Physics Forums. September 23.

Finally, some fields (cell and molecular biology) have working models of causation that, while useful, are not particularly illuminating. In the cell and molecular biology example, the traditional model of necessity and sufficiency (a mechanism being necessary but not sufficient) can be criticized as incomplete, because it does not incorporate counterfactuals or multiple potential causes. See this paper for more information:

Bizzarri, M., Brash, D.E., Briscoe, J., Grieneisen, V.A., Stern, C.D., and Levin, M. (2019). A call for a better understanding of causation in cell biology. Nature Reviews Molecular Cell Biology, 20, 261–262.
